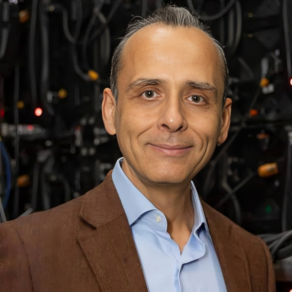

Xavier Bresson

[intermediate/advanced] Graph Transformers, Graph Generative Models and Large Language Models

Summary

This series of three lectures explores cutting-edge topics in graph machine learning. The first lecture delves into graph transformers, a generalization of transformer models for arbitrary graph structures. The second lecture introduces advanced techniques for generating new graphs, including diffusion models. The final lecture focuses on integrating graph information with large language models (LLMs). If time permits, each session will include practical exercises using PyTorch.

Syllabus

Lecture 1: Graph Transformers

- Overview of graph network architectures

- Graph positional encoding

- Transformers for sequences

- Graph transformers

- Graph ViT and MLP-Mixer
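As a small illustration of the graph positional encoding topic, here is a minimal PyTorch sketch of one common choice: Laplacian eigenvector encodings, in the spirit of Dwivedi and Bresson (2020). It is not taken from the lecture material. The function name `laplacian_positional_encoding`, the 4-node cycle graph, and the choice `k=2` are assumptions made for this example.

```python
import torch


def laplacian_positional_encoding(adj: torch.Tensor, k: int) -> torch.Tensor:
    """Return k Laplacian eigenvectors as node positional encodings.

    adj: dense symmetric adjacency matrix of shape (n, n).
    Uses the symmetric normalized Laplacian L = I - D^{-1/2} A D^{-1/2}
    and skips the first eigenvector (eigenvalue 0 for a connected graph).
    """
    n = adj.shape[0]
    deg = adj.sum(dim=1)
    # Inverse square root of degrees; isolated nodes get 0 to avoid division by zero.
    d_inv_sqrt = torch.where(deg > 0, deg.clamp(min=1e-12).pow(-0.5), torch.zeros_like(deg))
    lap = torch.eye(n, dtype=adj.dtype) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    # eigh returns eigenvalues in ascending order with orthonormal eigenvectors.
    _, eigvecs = torch.linalg.eigh(lap)
    return eigvecs[:, 1 : k + 1]


# Hypothetical toy graph: a cycle on 4 nodes (0-1-2-3-0).
adj = torch.tensor(
    [
        [0.0, 1.0, 0.0, 1.0],
        [1.0, 0.0, 1.0, 0.0],
        [0.0, 1.0, 0.0, 1.0],
        [1.0, 0.0, 1.0, 0.0],
    ]
)
pe = laplacian_positional_encoding(adj, k=2)  # one 2-dimensional encoding per node
```

Each row of `pe` would typically be projected and added to the node features before the first transformer layer. Eigenvectors are only defined up to sign (and up to rotation within a repeated eigenvalue), so models that use them usually need some way to handle that ambiguity, for example random sign flips during training.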

Lecture 2: Graph Generative Models

- Motivation with applications in molecular science

- Graph variational auto-encoders (VAEs)

- Graph Generative Adversarial Networks (GANs)

- Graph generation using diffusion models

Lecture 3: Graph Networks and Large Language Models

- LLMs are all you need: shifting paradigms

- Farewell to GNNs: transitioning to hybrid methods

- Review of LLMs and GNNs for text-based reasoning

- Leveraging LLMs for GNN reasoning tasks

- Enhancing LLMs using graph reasoning

References

Dwivedi, Bresson, A Generalization of Transformer Networks to Graphs, 2020.

He, Hooi, Laurent, Perold, LeCun, Bresson, A Generalization of ViT/MLP-Mixer to Graphs, 2022.

Kingma, Welling, Auto-Encoding Variational Bayes, 2013.

Goodfellow et al., Generative Adversarial Networks, 2014.

Ho, Jain, Abbeel, Denoising Diffusion Probabilistic Models, 2020.

He, Bresson, Laurent, Perold, LeCun, Hooi, Harnessing Explanations: LLM-to-LM Interpreter for Enhanced Text-Attributed Graph Representation Learning, 2023.

He, Tian, Sun, Chawla, Laurent, LeCun, Bresson, Hooi, G-Retriever: Retrieval-Augmented Generation for Textual Graph Understanding and Question Answering, 2024.

Pre-requisites

Linear algebra, deep learning fundamentals, basic graph theory, Python programming, and familiarity with PyTorch.

Short bio

Xavier Bresson is an Associate Professor in the Department of Computer Science at the National University of Singapore (NUS). His research focuses on Graph Deep Learning, a new framework that combines graph theory and neural networks to tackle complex data domains. He received the USD 2M NRF Fellowship, the largest individual grant in Singapore, to develop this new framework. He has also been awarded several research grants in the U.S. and Hong Kong. He co-authored one of the most cited works in this domain (the 10th most cited paper at NeurIPS) and has contributed significantly to maturing these emerging techniques. He has organized several conferences, workshops and tutorials on graph deep learning, including the IPAM’23 workshops on “Learning and Emergence in Molecular Systems”, the IPAM’23 and IPAM’21 workshops on “Deep Learning and Combinatorial Optimization”, the MLSys’21 workshop on “Graph Neural Networks and Systems”, the IPAM’19 and IPAM’18 workshops on “New Deep Learning Techniques”, and the NeurIPS’17, CVPR’17 and SIAM’18 tutorials on “Geometric Deep Learning on Graphs and Manifolds”. He is regularly invited to speak about his work at universities and companies. He has also spoken at the NeurIPS’22, KDD’21, KDD’23, AAAI’21 and ICML’20 workshops on “Graph Representation Learning”, and at the ICLR’20 workshop on “Deep Neural Models and Differential Equations”.